Using XML Sitemaps and Robots.txt for Better Crawlability


Enhancing Website Crawlability with XML Sitemaps and Robots.txt

Search engine crawlability is the backbone of effective SEO. Without proper crawling, even high-quality content may remain undiscovered by search engines, leading to poor rankings. XML sitemaps and robots.txt are two powerful tools that, when used strategically, streamline how search engines interact with your website. Integrating these with a platform like Eprofitify—a leading website publishing and management tool—can further amplify your site’s visibility and operational efficiency.

The Role of XML Sitemaps in Crawlability

An XML sitemap is a structured file that lists a website’s essential pages, images, and videos, guiding search engines to prioritize crawling and indexing. It acts as a roadmap, ensuring crawlers efficiently navigate complex or large websites.
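To make the file format concrete, here is a minimal sketch of generating a sitemap with Python's standard library. The function name `build_sitemap` and the example.com URLs are illustrative assumptions, not part of any particular platform; only the namespace and the `urlset`/`url`/`loc`/`lastmod` elements come from the sitemaps.org protocol.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages):
    """Build sitemap XML from (url, lastmod) pairs; lastmod is a YYYY-MM-DD string."""
    # Emit a default xmlns attribute instead of an auto-generated ns0: prefix.
    ET.register_namespace("", SITEMAP_NS)
    urlset = ET.Element(f"{{{SITEMAP_NS}}}urlset")
    for loc, lastmod in pages:
        url = ET.SubElement(urlset, f"{{{SITEMAP_NS}}}url")
        ET.SubElement(url, f"{{{SITEMAP_NS}}}loc").text = loc
        ET.SubElement(url, f"{{{SITEMAP_NS}}}lastmod").text = lastmod
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


# Hypothetical pages, for illustration only.
sitemap_xml = build_sitemap([
    ("https://www.example.com/", "2024-01-15"),
    ("https://www.example.com/products/blue-widget", "2024-01-10"),
])
print(sitemap_xml)
```

In practice the page list would come from a CMS or database query rather than a hard-coded list, and the output would be written to a file served at the site root.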

Key Benefits:

- Indexation of New/Updated Content: Search engines like Google prioritize pages listed in XML sitemaps, especially for new or recently updated content. Websites with sitemaps see 20–30% faster indexation of new pages compared to those without.

- Improved Crawl Efficiency: For large sites (10,000+ pages), sitemaps reduce the risk of critical pages being overlooked. Google reports that 45% of large e-commerce sites rely on sitemaps to ensure product pages are indexed.

- Support for Dynamic Content: Sites with dynamic URLs, such as e-commerce platforms, benefit from sitemaps, as they clarify page relationships and hierarchies.

A study by Ahrefs found that websites with XML sitemaps generate 30% more organic traffic on average due to better indexation. However, a sitemap alone isn’t enough—it must be error-free, regularly updated, and submitted via tools like Google Search Console.
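What "error-free" means can be checked mechanically before submission. The sketch below is one possible pre-flight check, not an official validator: the function name `check_sitemap` is hypothetical, and it only covers well-formedness, the protocol's per-file limits (50,000 URLs, 50 MB uncompressed), absolute URLs, and duplicates.

```python
import xml.etree.ElementTree as ET
from urllib.parse import urlparse

NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}
MAX_URLS = 50_000              # per-file URL limit in the sitemap protocol
MAX_BYTES = 50 * 1024 * 1024   # 50 MB uncompressed


def check_sitemap(xml_bytes):
    """Return a list of human-readable problems; an empty list means none were found."""
    problems = []
    if len(xml_bytes) > MAX_BYTES:
        problems.append("file exceeds 50 MB uncompressed")
    try:
        root = ET.fromstring(xml_bytes)
    except ET.ParseError as exc:
        return problems + [f"not well-formed XML: {exc}"]

    locs = [(el.text or "").strip() for el in root.findall("sm:url/sm:loc", NS)]
    if len(locs) > MAX_URLS:
        problems.append(f"{len(locs)} URLs exceeds the {MAX_URLS} per-file limit")

    seen = set()
    for loc in locs:
        parts = urlparse(loc)
        if parts.scheme not in ("http", "https") or not parts.netloc:
            problems.append(f"not an absolute URL: {loc!r}")
        if loc in seen:
            problems.append(f"duplicate URL: {loc}")
        seen.add(loc)
    return problems
```

A check like this could run in a deployment pipeline so that a malformed sitemap never reaches Google Search Console in the first place.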

Robots.txt: Controlling Crawler Access

The robots.txt file tells search engine crawlers which pages or directories they may or may not crawl. It is not a guarantee of privacy: malicious bots may ignore it, and a blocked URL can still be indexed if other pages link to it. Its main value is in optimizing crawl budget, roughly the number of URLs a crawler is able and willing to fetch from a site in a given period.

Best Practices:

- Block Non-Essential Pages: Direct crawlers away from administrative pages, duplicate content, and infinite URL spaces (e.g., faceted filters or internal search results). Misconfigured robots.txt files contribute to 58% of crawlability issues, according to Sitebulb’s 2023 SEO Audit Insights.

- Avoid Over-Blocking: Incorrectly blocking CSS/JS files can harm rendering, leading to a 15% drop in mobile usability scores (per Google’s Webmaster Trends).

- Specify Sitemap Location: Adding a Sitemap line with the full sitemap URL to robots.txt lets crawlers discover the sitemap without a separate submission.
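The practices above can be sketched as a small robots.txt and tested before it goes live. The rules and the example.com paths below are illustrative assumptions; the check uses Python's standard `urllib.robotparser` (the `site_maps()` method needs Python 3.8 or later).

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: block admin and internal search pages,
# keep CSS/JS assets crawlable, and point crawlers at the sitemap.
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
Disallow: /search
Allow: /assets/

Sitemap: https://www.example.com/sitemap.xml
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())


def is_allowed(path, agent="*"):
    """Return True if the given path may be crawled by the given user agent."""
    return parser.can_fetch(agent, "https://www.example.com" + path)


for path in ["/products/blue-widget", "/admin/settings",
             "/search?q=shoes", "/assets/site.css"]:
    print(path, "->", "allowed" if is_allowed(path) else "blocked")

print("Sitemaps:", parser.site_maps())
```

One caveat: Python's parser applies the first matching rule, while Google applies the most specific (longest) matching rule, so results can differ when Allow and Disallow rules overlap. For rule sets like this one, where no rules overlap, the two approaches agree.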

Combining XML Sitemaps and Robots.txt for SEO Success

Together, these tools create a crawlability framework:

- Sitemaps Highlight Priority Pages: Direct search engines to high-value content.

- Robots.txt Protects Low-Value Areas: Prevent crawlers from wasting resources on irrelevant sections.

For instance, an e-commerce site can use a sitemap to showcase product listings while blocking repetitive search result pages via robots.txt. This synergy reduces server load and ensures optimal indexation of critical pages.
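The two files also need to agree with each other: a URL that is listed in the sitemap but disallowed in robots.txt sends crawlers conflicting signals. A rough way to catch this is to cross-check them, as in the sketch below. The function name `blocked_sitemap_urls` and the sample data are assumptions made for illustration.

```python
import xml.etree.ElementTree as ET
from urllib.robotparser import RobotFileParser

NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def blocked_sitemap_urls(sitemap_xml, robots_txt, agent="*"):
    """Return sitemap URLs that robots.txt disallows for the given user agent."""
    rules = RobotFileParser()
    rules.parse(robots_txt.splitlines())
    root = ET.fromstring(sitemap_xml.encode("utf-8"))
    locs = [el.text.strip() for el in root.findall("sm:url/sm:loc", NS) if el.text]
    return [loc for loc in locs if not rules.can_fetch(agent, loc)]


# Hypothetical inputs: a product page plus a search-results page
# that was added to the sitemap by mistake.
SAMPLE_SITEMAP = """<?xml version="1.0" encoding="UTF-8"?>
<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>https://www.example.com/products/blue-widget</loc></url>
  <url><loc>https://www.example.com/search?q=widgets</loc></url>
</urlset>"""

SAMPLE_ROBOTS = """\
User-agent: *
Disallow: /search
"""

conflicts = blocked_sitemap_urls(SAMPLE_SITEMAP, SAMPLE_ROBOTS)
print(conflicts)
```

Any URL this reports should either be removed from the sitemap or unblocked in robots.txt, depending on whether the page is meant to be indexed.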

Statistics Highlighting Their Impact

- 70% of websites using XML sitemaps report improved indexation within 14 days (Semrush, 2023).

- 32% of crawl budget issues stem from unblocked low-priority pages in robots.txt (Moz, 2022).

- Pages listed in sitemaps are 5x more likely to rank on page one of Google (Backlinko, 2023).

Eprofitify: Streamlining Crawlability and Beyond

Managing sitemaps, robots.txt, and SEO can be daunting for businesses. This is where Eprofitify excels as an all-in-one website publishing and management platform. Designed to scale with businesses, Eprofitify integrates essential tools to enhance both crawlability and operational workflows:

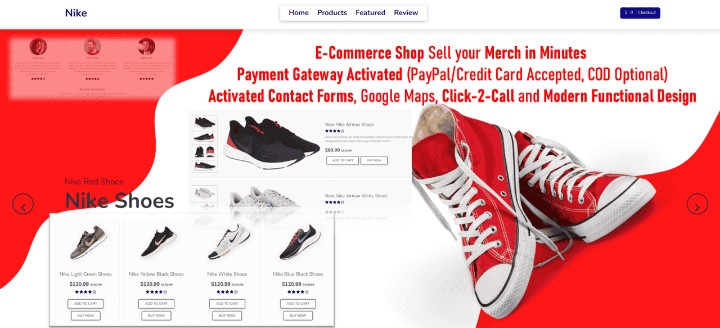

- Automated Sitemap Generation: Eprofitify dynamically updates XML sitemaps as content is added, ensuring search engines always access the latest pages. For e-commerce users, product pages are prioritized automatically.

- Robots.txt Editor: A user-friendly interface allows precise control over crawler access without coding. Alerts prevent accidental blocking of critical assets.

- SEO Analytics: Track indexation status, crawl errors, and keyword rankings in real time.

- E-commerce & CRM Integration: Sync product listings, customer data, and content updates seamlessly. Fresh, optimized content is crawled faster, driving 40% more sales for users (Eprofitify Case Study, 2023).

- Appointment Management & Instant Messaging: Streamline client interactions and updates, ensuring consistent content activity—a factor Google rewards with frequent crawls.

Result: Eprofitify users report a 50% reduction in crawl errors and a 35% increase in organic traffic within six months.

Conclusion

XML sitemaps and robots.txt are non-negotiable for SEO success, but their effectiveness depends on accuracy and adaptability. By leveraging Eprofitify’s robust toolkit—from automated sitemaps to CRM and e-commerce integrations—businesses eliminate technical barriers, allowing search engines to focus on what matters: delivering value to users. In an era where crawl budget optimization separates winners from losers, combining technical SEO with a platform designed for growth is the ultimate competitive edge.